OpenTelemetry OpAMP: Getting Started Guide

Straightforward guide to OpenTelemetry OpAMP Supervisor: what it is, hands-on setup with the opamp-go example, and integrating an OTel collector via Extension and Supervisor.

So you instrumented your application with OpenTelemetry.

You send all your data to a collector and deploy to production.

You now have a single collector for all your telemetry needs. Life is good.

Then things get complicated: you want to collect host metrics using OpenTelemetry, so you deploy another type of collector to each host in your environment.

Now you have two types of collectors running - life is still good.

Then one night you wake up gripped by sudden horror; cold sweat, heart racing.

You just had a nightmare of purple dogs and your APM bill. You decide that enough is enough.

You want to sample your span data.

Now you need new types of collectors: a load-balancing collector and a tail-sampling collector. At this point, you have four different types of collectors running in your infrastructure.

Life is not as good as it used to be.

Suddenly, something breaks: a config fails, or a collector restarts because some configuration needs updating. But you have four types of collectors, each with hundreds of instances, all running huge, complex YAML configurations that no one understands.

What do you do?

This is where OpAMP (Open Agent Management Protocol) comes in.

Table of Contents

- What is OpenTelemetry OpAMP?

- How OpAMP fits OpenTelemetry today

- The OpAMP Extension (read-only)

- The OpAMP Supervisor (full remote control)

- Why OpAMP?

- Lawrence makes it better

What is OpenTelemetry OpAMP?

OpAMP is a protocol created by the OpenTelemetry community to help manage large fleets of OTel agents.

OpAMP is primarily a specification, but it also defines an implementation pattern for how agents communicate with a remote management server and support features like:

- Remote configuration

- Status reporting

- Agent telemetry

- Secure agent updates

So how does OpAMP work?

There are two components: a server and a client (the agent). They communicate over plain HTTP or WebSocket and exchange two message types:

- The server sends a ServerToAgent message.

- The agent sends an AgentToServer message.

```protobuf
message ServerToAgent {
    bytes instance_uid = 1;
    ServerErrorResponse error_response = 2;
    AgentRemoteConfig remote_config = 3;
    ConnectionSettingsOffers connection_settings = 4; // Status: [Beta]
    PackagesAvailable packages_available = 5; // Status: [Beta]
    uint64 flags = 6;
    uint64 capabilities = 7;
    AgentIdentification agent_identification = 8;
    ServerToAgentCommand command = 9; // Status: [Beta]
    CustomCapabilities custom_capabilities = 10; // Status: [Development]
    CustomMessage custom_message = 11; // Status: [Development]
}

message AgentToServer {
    bytes instance_uid = 1;
    uint64 sequence_num = 2;
    AgentDescription agent_description = 3;
    uint64 capabilities = 4;
    ComponentHealth health = 5;
    EffectiveConfig effective_config = 6;
    RemoteConfigStatus remote_config_status = 7;
    PackageStatuses package_statuses = 8;
    AgentDisconnect agent_disconnect = 9;
    uint64 flags = 10;
    ConnectionSettingsRequest connection_settings_request = 11; // Status: [Development]
    CustomCapabilities custom_capabilities = 12; // Status: [Development]
    CustomMessage custom_message = 13; // Status: [Development]
}
```

Without getting too nerdy about the protocol spec, this is a flexible structure that also allows us to implement custom functionality with OpAMP.
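To make one field from the messages above concrete, here is a minimal Python sketch of how a server might use sequence_num. As I understand the spec, the agent increments sequence_num by one on every AgentToServer message, and a server that notices a gap can ask for a full status report by setting a ReportFullState bit in ServerToAgent.flags. The ToyServer class and the simplified message classes are hypothetical, and the flag value is an assumption to verify against opamp.proto; none of this is the opamp-go API.

```python
from dataclasses import dataclass
from typing import Dict

# Assumed value of ServerToAgentFlags_ReportFullState; verify against opamp.proto.
REPORT_FULL_STATE = 0x1


@dataclass
class AgentToServer:
    """Simplified stand-in: only the two fields this sketch needs."""
    instance_uid: bytes
    sequence_num: int


@dataclass
class ServerToAgent:
    """Simplified stand-in for the server's reply."""
    instance_uid: bytes
    flags: int = 0


class ToyServer:
    """Hypothetical server that only tracks sequence numbers per agent."""

    def __init__(self) -> None:
        self._last_seq: Dict[bytes, int] = {}

    def handle(self, msg: AgentToServer) -> ServerToAgent:
        previous = self._last_seq.get(msg.instance_uid)
        self._last_seq[msg.instance_uid] = msg.sequence_num
        reply = ServerToAgent(instance_uid=msg.instance_uid)
        # A gap means at least one message was lost, so the server's view of
        # the agent may be stale: ask the agent to resend everything.
        if previous is not None and msg.sequence_num != previous + 1:
            reply.flags |= REPORT_FULL_STATE
        return reply
```

The point of the design is that agents can normally send only what changed, and the sequence number gives the server a cheap way to notice when that shortcut is no longer safe.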

So we have a protocol that defines how agents and servers can talk to each other. How does it integrate with OpenTelemetry today?

How OpAMP fits OpenTelemetry today

First up is the opamp-go implementation repo.

This is an implementation of the OpAMP spec for both clients and servers, and the examples include a server with a basic UI that we’ll use for this guide.

Ok, we have a server. How do we use it with an existing OTel Collector?

You can integrate OpAMP with the OTel Collector in three increasing levels of capability:

- OpAMP Extension (read‑only)

- OpAMP Supervisor (full remote management)

- OpAMP Bridge (for the OpenTelemetry Kubernetes Operator; it's beyond the scope of this guide, and we'll cover it in a dedicated one)

We’ll walk through the first two, step by step.

Prerequisites

To follow along, you'll need:

- Docker - For running containerized collectors

- Go (1.19 or later) - To run the OpAMP server example

That's it! Let's dive into the first integration method.

The OpAMP Extension (read-only)

The OpenTelemetry Collector supports extensions (e.g., the health check extension), and the community created the OpAMP extension.

By nature, extensions have limited capabilities: the OpAMP extension cannot update the collector’s installed packages or its configuration - it’s “read-only” for OpAMP.

It’s useful for status reporting and sending the collector’s config to the OpAMP server. That’s basically it.

To get started, we’ll use the contrib Docker image otel/opentelemetry-collector-contrib for the Collector, and we’ll clone the opamp-go repo and run the example server locally.

Set up the OpAMP server:

```shell
git clone https://github.com/open-telemetry/opamp-go.git
cd opamp-go/internal/examples/server
go run main.go
```

This starts an OpAMP server listening on localhost:4320 for OpAMP agents.

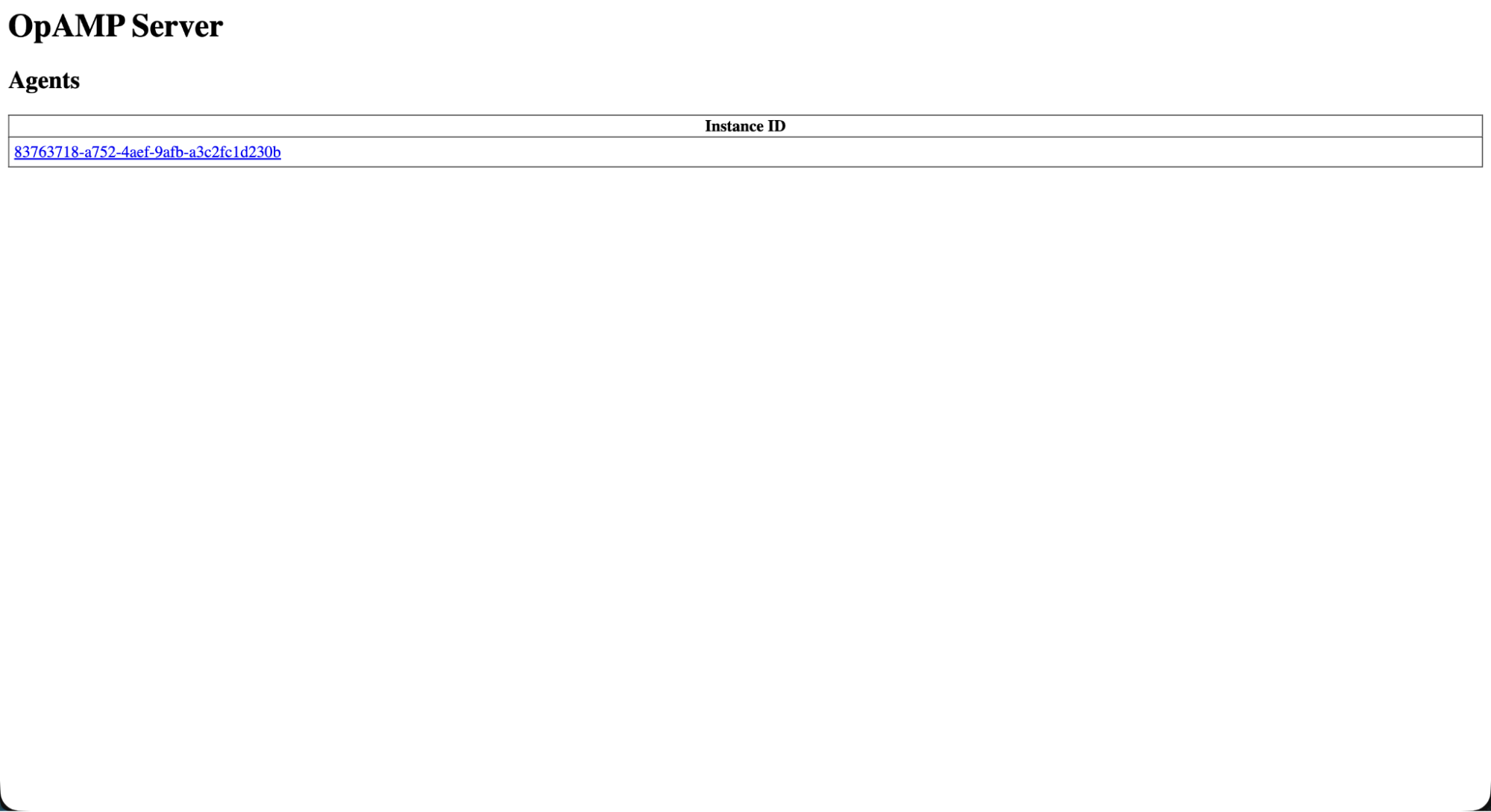

Navigate to localhost:4321 to see the agents page (it will be empty at first).
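If the page does not load, a quick way to tell whether the example server is actually listening is to probe both ports. This is a small sketch using only the Python standard library; the port numbers come from this guide, and port_open is a hypothetical helper name.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # 4320 is the OpAMP endpoint and 4321 the web UI, as used in this guide.
    for name, port in (("OpAMP endpoint", 4320), ("web UI", 4321)):
        state = "reachable" if port_open("localhost", port) else "not reachable"
        print(f"{name} on localhost:{port} is {state}")
```

A successful TCP connection only shows that something is listening on the port, not that it speaks OpAMP, so treat it as a first check.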

Now let’s set up an OTel Collector with a basic configuration for the OpAMP extension.

- Create a folder named opamp-extension.

- Create a file named config.yaml inside it.

- Add any collector config you want to test. In this example, we'll use a simple config with an OTLP receiver and a console (debug) exporter.

- Add the opamp extension in the extensions section.

- Set the server address (ws endpoint) to host.docker.internal:4320. On Linux, where host.docker.internal is not defined by default, use 172.17.0.1:4320, or add --add-host=host.docker.internal:host-gateway to your docker run command.

- Add the extension to the service extensions array.

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

exporters:
  debug:
    verbosity: detailed

processors:
  batch:

extensions:
  opamp:
    server:
      ws:
        endpoint: ws://host.docker.internal:4320/v1/opamp
    capabilities:
      reports_effective_config: true

service:
  extensions: [opamp]
  pipelines:
    traces:
      receivers: [otlp]
      exporters: [debug]
    metrics:
      receivers: [otlp]
      exporters: [debug]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [debug]
```

Now, let's run our collector using Docker:

```shell
docker run -d --name otelcol-opamp \
  -v $(pwd)/config.yaml:/etc/otelcol-contrib/config.yaml \
  otel/opentelemetry-collector-contrib:latest \
  --config=/etc/otelcol-contrib/config.yaml
```

Your collector should now be running and reporting to the OpAMP server.

Go back to the agents page to see it.
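If the collector does not appear, one thing worth ruling out is a mismatch between the extensions section and the service extensions array: an extension that is defined but not listed under service is never started, and one that is listed but not defined stops the collector at startup. The sketch below checks this on a config that has already been loaded into a Python dict (for example with a YAML parser of your choice); extension_problems is a hypothetical helper, not part of any OpenTelemetry tooling.

```python
from typing import List


def extension_problems(config: dict) -> List[str]:
    """Compare the extensions that are defined with the ones the service enables."""
    defined = set(config.get("extensions") or {})
    enabled = set((config.get("service") or {}).get("extensions") or [])
    problems = []
    for name in sorted(enabled - defined):
        problems.append(f"{name}: listed under service.extensions but not defined")
    for name in sorted(defined - enabled):
        problems.append(f"{name}: defined but not listed under service.extensions")
    return problems


if __name__ == "__main__":
    # Same shape as the config in this guide, with the service entry forgotten.
    broken = {
        "extensions": {
            "opamp": {"server": {"ws": {"endpoint": "ws://host.docker.internal:4320/v1/opamp"}}}
        },
        "service": {"pipelines": {}},
    }
    for line in extension_problems(broken):
        print(line)
```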

You’ll see the collector and the config we set up, running and reporting to OpAMP. This is a good start, but it doesn’t provide all the capabilities we need for production.

This leads us to the second method.

The OpAMP Supervisor (full remote control)

To enable the OTel Collector’s advanced remote management capabilities without changing the collector itself, the community introduced a “supervisor” process.

Instead of running the otelcol process yourself, you start the supervisor, and it starts (and manages) the collector as a child process.

This pattern gives us great power (which comes, of course, with great responsibility): you can update config, download new packages, stop/restart the process, pull its stdout logs and send them anywhere, and more.
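To make the wrapping idea concrete, here is a toy Python sketch of the pattern: a parent process that owns a child process and can start, stop, and restart it. It illustrates the general supervisor pattern only and is not how the real OpAMP Supervisor is implemented; ToySupervisor and its methods are hypothetical names, and a sleeping Python process stands in for the collector.

```python
import subprocess
import sys
from typing import List, Optional


class ToySupervisor:
    """Owns one child process and controls its lifecycle."""

    def __init__(self, argv: List[str]) -> None:
        self.argv = list(argv)
        self.child: Optional[subprocess.Popen] = None

    def start(self) -> None:
        # The child inherits stdout/stderr; a real supervisor would capture them.
        self.child = subprocess.Popen(self.argv)

    def running(self) -> bool:
        return self.child is not None and self.child.poll() is None

    def stop(self, timeout: float = 5.0) -> None:
        if self.child is None:
            return
        self.child.terminate()  # ask politely first
        try:
            self.child.wait(timeout=timeout)
        except subprocess.TimeoutExpired:
            self.child.kill()  # then force it
            self.child.wait()
        self.child = None

    def restart(self, new_argv: Optional[List[str]] = None) -> None:
        """Stop the child and start it again, optionally with new arguments
        (for example, pointing at a freshly written config file)."""
        self.stop()
        if new_argv is not None:
            self.argv = list(new_argv)
        self.start()


if __name__ == "__main__":
    # Stand-in for the collector: a process that just sleeps.
    sup = ToySupervisor([sys.executable, "-c", "import time; time.sleep(60)"])
    sup.start()
    print("running:", sup.running())
    sup.restart()
    print("running after restart:", sup.running())
    sup.stop()
    print("running after stop:", sup.running())
```

Because the parent owns the child's lifecycle, applying a new config can be as simple as writing the file and restarting the child, which is what makes remote management possible without changing the collector itself.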

Thankfully, the supervisor has a dedicated Docker image, so setup is straightforward.

To make it even easier, we’ll create a custom container that includes both the supervisor and the collector.

- Create a folder named opamp-supervisor.

- Create a new file named Dockerfile.

```dockerfile
# Get the OpAMP supervisor
FROM otel/opentelemetry-collector-opampsupervisor:latest AS supervisor

# Get the OpenTelemetry collector
FROM otel/opentelemetry-collector-contrib:latest AS otel

# Final stage
FROM alpine:latest

# Copy the supervisor binary from the supervisor stage
COPY --from=supervisor /usr/local/bin/opampsupervisor ./opampsupervisor

COPY supervisor.yaml .
COPY collector.yaml .

# Copy the collector binary from the collector stage
COPY --from=otel /otelcol-contrib /otelcol-contrib

EXPOSE 4317 4318

ENTRYPOINT ["./opampsupervisor", "--config", "supervisor.yaml"]
```

- Create a file named supervisor.yaml.

- Add the supervisor config.

```yaml
server:
  endpoint: ws://host.docker.internal:4320/v1/opamp
  tls:
    insecure_skip_verify: true
    insecure: true

capabilities:
  reports_effective_config: true
  reports_own_metrics: true
  reports_own_logs: true
  reports_health: true
  accepts_remote_config: true
  reports_remote_config: true

agent:
  executable: /otelcol-contrib
  config_files:
    - /collector.yaml

storage:
  directory: /var/lib/opamp
```

Note that we’re enabling more capabilities than with the OpAMP extension.

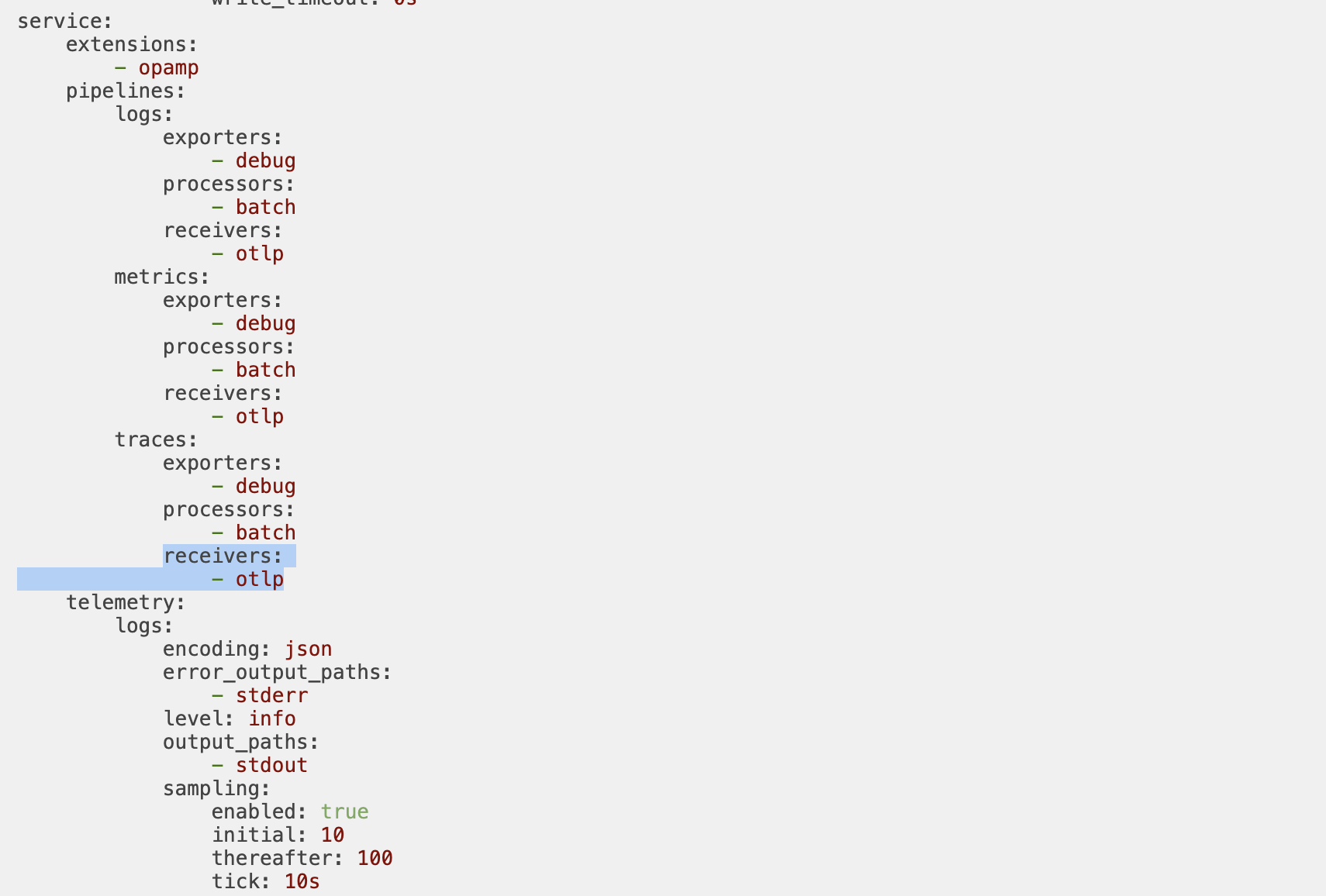
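On the wire, these capability switches end up as bits in the capabilities field of AgentToServer. The Python sketch below shows how the two setups in this guide would differ as bitmasks. The bit values are what I understand the AgentCapabilities enum in opamp.proto to define (only the ones used here are listed), so verify them against the proto before relying on them; the YAML_KEY_TO_BIT mapping and capability_mask helper are hypothetical and only mirror the YAML keys used in this guide.

```python
from enum import IntFlag
from typing import Dict


class AgentCapabilities(IntFlag):
    # Assumed values from the AgentCapabilities enum in opamp.proto.
    REPORTS_STATUS = 0x1
    ACCEPTS_REMOTE_CONFIG = 0x2
    REPORTS_EFFECTIVE_CONFIG = 0x4
    REPORTS_OWN_METRICS = 0x40
    REPORTS_OWN_LOGS = 0x80
    REPORTS_HEALTH = 0x800
    REPORTS_REMOTE_CONFIG = 0x1000


# Hypothetical mapping from the YAML keys in this guide to capability bits.
YAML_KEY_TO_BIT = {
    "accepts_remote_config": AgentCapabilities.ACCEPTS_REMOTE_CONFIG,
    "reports_effective_config": AgentCapabilities.REPORTS_EFFECTIVE_CONFIG,
    "reports_own_metrics": AgentCapabilities.REPORTS_OWN_METRICS,
    "reports_own_logs": AgentCapabilities.REPORTS_OWN_LOGS,
    "reports_health": AgentCapabilities.REPORTS_HEALTH,
    "reports_remote_config": AgentCapabilities.REPORTS_REMOTE_CONFIG,
}


def capability_mask(enabled: Dict[str, bool]) -> AgentCapabilities:
    """Fold the enabled YAML switches into one bitmask."""
    mask = AgentCapabilities.REPORTS_STATUS  # every agent is expected to set this
    for key, on in enabled.items():
        if on:
            mask |= YAML_KEY_TO_BIT[key]
    return mask


extension = capability_mask({"reports_effective_config": True})
supervisor = capability_mask({
    "reports_effective_config": True,
    "reports_own_metrics": True,
    "reports_own_logs": True,
    "reports_health": True,
    "accepts_remote_config": True,
    "reports_remote_config": True,
})
print(hex(extension), hex(supervisor))
print(AgentCapabilities.ACCEPTS_REMOTE_CONFIG in extension)   # read-only agent
print(AgentCapabilities.ACCEPTS_REMOTE_CONFIG in supervisor)  # remotely configurable
```

The server can use this mask to decide what to offer: there is no point in sending a remote config to an agent that never advertised the accepts_remote_config bit.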

Create a file named collector.yaml in the same folder with a sample collector config similar to before, but without the OpAMP extension (the supervisor handles the OpAMP connection itself).

Let’s build and run our new Docker container:

```shell
docker build -t opamp-supervisor . && docker run -d opamp-supervisor
```

Visit the agents page again. You should see the new “supervised” agent.

Now let’s modify the collector’s config remotely.

We’ll add a new exporter and include it in the pipeline. In the “Additional Configuration” editor, add:

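```yaml
exporters:
  otlp:
    endpoint: localhost:4317
    tls:
      insecure: true

service:
  pipelines:
    traces:
      receivers: [otlp]
      # Name every exporter the pipeline should use, including the existing one
      exporters: [debug, otlp]
```

The supervisor will attempt to merge this with the existing collector config.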

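In this case, we’re adding a new OTLP exporter that sends traces to localhost:4317. Inside the container that address is the collector’s own OTLP receiver, so treat it as a placeholder and point it at a real backend before sending actual traces.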

Refresh the agent page - you should see the agent’s effective config now includes the new OTLP exporter in the traces pipeline.
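As a rough mental model of what "merge" means here, the Python sketch below overlays a remote fragment on a local config: nested maps are merged key by key, while lists and scalar values from the remote side replace the local ones. This mirrors the usual collector behavior when several config sources are combined, but it is a simplified model and not the supervisor's actual code; deep_merge is a hypothetical helper.

```python
import copy


def deep_merge(base: dict, overlay: dict) -> dict:
    """Return a new dict: maps merge recursively, everything else is replaced."""
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


local = {
    "exporters": {"debug": {"verbosity": "detailed"}},
    "service": {"pipelines": {"traces": {"receivers": ["otlp"], "exporters": ["debug"]}}},
}
remote = {
    "exporters": {"otlp": {"endpoint": "localhost:4317", "tls": {"insecure": True}}},
    "service": {"pipelines": {"traces": {"exporters": ["debug", "otlp"]}}},
}

effective = deep_merge(local, remote)
print(sorted(effective["exporters"]))
print(effective["service"]["pipelines"]["traces"])
```

Under this model the exporters map ends up with both entries, but the pipeline's exporters list is replaced as a whole, which is why a remote fragment should list every exporter the pipeline needs rather than only the new one.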

At this point, you’ve used the supervisor to change the running collector: you added an OTLP exporter, merged it into the traces pipeline, and saw the effective config reflect the update in the agents page.

From here, you can iterate the same way - add receivers and exporters, tweak processors - directly from the OpAMP server UI and watch the Supervisor apply changes safely.

Why OpAMP?

Back to your awful and vivid nightmare: when you go from one collector to many (different types, hundreds of instances, frequent changes), keeping configs aligned and knowing what’s actually running and what broke gets extremely challenging.

That’s the core win of OpAMP: centralized, remote control of many collectors with one workflow.

One place to see agents, their health, and their effective config, and to safely roll changes across the fleet from a single UI.

Lawrence makes it better

In this guide, we stuck to the example UI that ships with opamp-go. While it’s a great way to understand the concept of OpAMP, in real-world scenarios, you’ll want a more advanced set of capabilities.

Lawrence helps teams configure, visualize, test, and troubleshoot complex OpenTelemetry deployments at scale.

We’re currently in private beta, so if you’re migrating to OpenTelemetry, need to manage a fleet of OTel agents, need advice on OpenTelemetry (we know our stuff), or need a hug, reach out here.